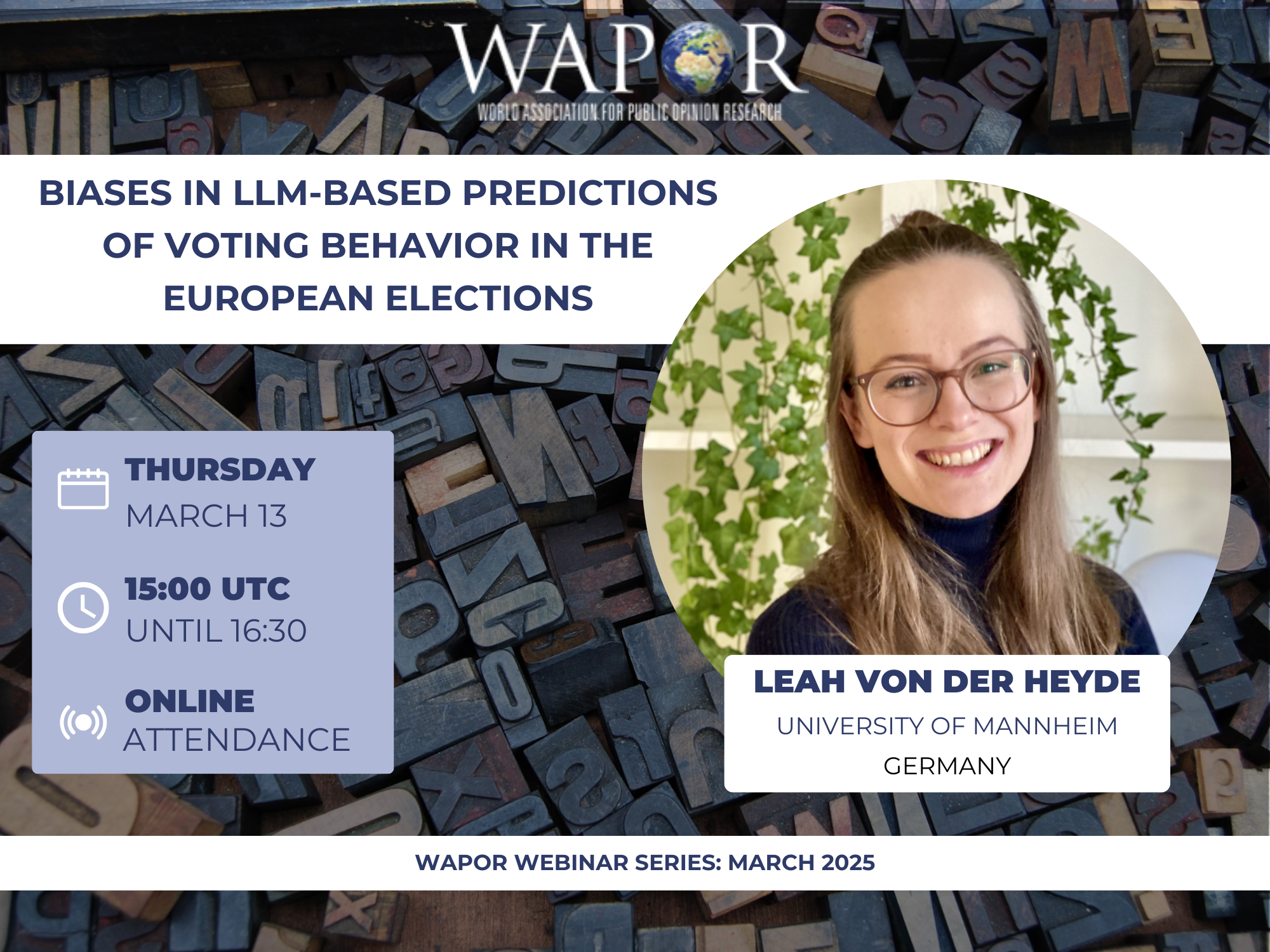

Biases in LLM-Based Predictions of Voting Behavior in the European Elections

Join us this Thursday, March 13, at 15:00 UTC, for our webinar on biases in LLM-based predictions of voting behavior, using the case of the European elections, with PhD candidate Leah von der Heyde from the University of Mannheim. Large language models (LLMs) have become an increasingly significant tool in political research, particularly for analyzing and forecasting electoral behavior. Yet while these methods are powerful tools for researchers, they also introduce systematic biases that can shape interpretations of voter preferences. This webinar will explore how these biases manifest in predictive models and what this means for the study of elections and public opinion.

It has been proposed that “synthetic samples” based on large language models (LLMs) could serve as efficient alternatives to surveys of humans, given that LLM outputs are based on training data that includes information on human attitudes and behavior. This might be especially helpful for challenging tasks in public opinion research, such as election prediction. However, LLM-synthetic samples might exhibit bias, for example because training data and alignment processes are unrepresentative of diverse contexts. Such biases risk reinforcing existing biases in research, policymaking, and society. Therefore, it is important to investigate whether and under which conditions LLM-generated synthetic samples can be used for public opinion prediction.

This webinar presents research examining the extent of context-dependent biases in LLM-based predictions of individual public opinion by predicting the results of the 2024 European Parliament elections. A preprint containing partial results of the project presented in the webinar can be accessed in advance here.

Before the elections took place, three LLMs were asked to predict the individual voting behavior of 26,000 eligible European voters from all 27 member states. They were prompted with each person’s individual-level background information, while varying input language and the amount of information provided in the prompt. By comparing the LLM-based predictions to the actual results, the study shows that:

(1) LLM-based predictions of future voting behavior based on past training and survey data largely fail;

(2) their accuracy is unequally distributed across national and linguistic contexts;

(3) it is currently infeasible to repurpose or supplement limited individual-level survey data with off-the-shelf LLM-based synthetic data.

The findings emphasize the limited applicability of LLM-synthetic samples to public opinion prediction.
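
For readers who want a concrete picture of the setup described above, the following is a minimal sketch of how individual-level prompts might be built and how aggregated predictions might be compared to official results. It is not the study's actual code: the attribute names in `PROFILE_FIELDS`, the prompt wording, and the helper functions are all hypothetical, and both the call to an LLM and the translation of the prompt into different input languages are left out.

```python
from collections import Counter

# Hypothetical respondent attributes; the study's actual variables may differ.
PROFILE_FIELDS = ["age", "gender", "country", "education", "political_interest"]


def build_prompt(profile, n_fields):
    """Describe a respondent using only the first n_fields attributes.

    Varying n_fields mimics varying the amount of information in the prompt.
    """
    used = PROFILE_FIELDS[:n_fields]
    description = ", ".join(f"{k}: {profile[k]}" for k in used if k in profile)
    return (
        f"Respondent profile ({description}). "
        "Which party will this person vote for in the 2024 European "
        "Parliament election? Answer with a party name or 'abstain'."
    )


def vote_shares(predictions):
    """Aggregate individual predicted votes into shares per answer."""
    counts = Counter(predictions)
    total = sum(counts.values())
    return {party: n / total for party, n in counts.items()}


def mean_absolute_error(predicted, actual):
    """Average absolute gap between predicted and actual shares."""
    parties = set(predicted) | set(actual)
    gaps = [abs(predicted.get(p, 0.0) - actual.get(p, 0.0)) for p in parties]
    return sum(gaps) / len(gaps)
```

In such a setup, each prompt would be sent to a model, the answers collected per country, and `mean_absolute_error` computed against the official result for that country, which makes differences in accuracy across national and linguistic contexts directly comparable.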

By the end of the webinar, attendees will have gained a high-level understanding of LLMs’ inner workings and the logic behind LLM-based synthetic samples, their purpose and implementation, as well as their potential pitfalls.

Presentation slides: WAPOR_Webinar_lvdh

Leah von der Heyde is a research associate at the Social Data Science and AI Lab (SODA) at LMU Munich and a final-year PhD candidate at the University of Mannheim. Her work centers around improving the understanding of public opinion using AI, digital traces, and social media, while identifying and mitigating biases. Leah is passionate about data justice – giving a voice to those groups typically misrepresented in survey and online data. Substantively, she is particularly interested in political attitudes and voting behavior. Leah has a background in Political Science from LMU, the University of Mannheim, and Georgetown University, with specializations in political attitudes, survey research, and quantitative methods. Previously, Leah worked for the European Social Survey at GESIS – Leibniz Institute for the Social Sciences, the European Parliamentary Research Service, and several market and public opinion research institutes in Germany and Sweden.